Faithful Summarization of German News

Over the past year, there has been remarkable progress in the field of text summarization especially due to the emergent abilities of the recent Large Language Models (LLMs). However, summarization models cannot yet ensure that summaries are factually consistent with the source and may fabricate information (i.e. hallucinate). In addition, most of the research focuses on English, relying on annotated data that is often not available in other languages. More recent works employ multilingual datasets, yet they do not include German. Overall, hallucination poses a major issue in journalism, as it is essential to provide the reader with accurate information. Particularly within the Swiss media industry, it is important to be able to detect and mitigate hallucination in German text. The goal of this project is to tackle hallucination with a special focus on German news summarization to encourage the research community to implement and evaluate approaches that also consider German.

Absinth: a German Dataset for Hallucination Detection

To foster research on hallucination in German summarization, the MTC built and open-sourced in collaboration with the University of Zurich the Absinth dataset, a manually annotated dataset for hallucination detection. The dataset consists of ~ 4,300 pairs of article and summary sentences and their corresponding label faithful, intrinsic, or extrinsic hallucination. While intrinsic hallucinations are counterfactual to the source article, extrinsic hallucinations contain information that cannot be verified against the source. The articles come from a publicly available dataset of 20 Minuten news articles, and the corresponding summaries are generated using multiple summarization models, including the state-of-the-art pre-trained language models for German summarization and the latest instruction-based LLMs such as OpenAI’s GPT-4 and the open-source model Llama 2 by Meta.

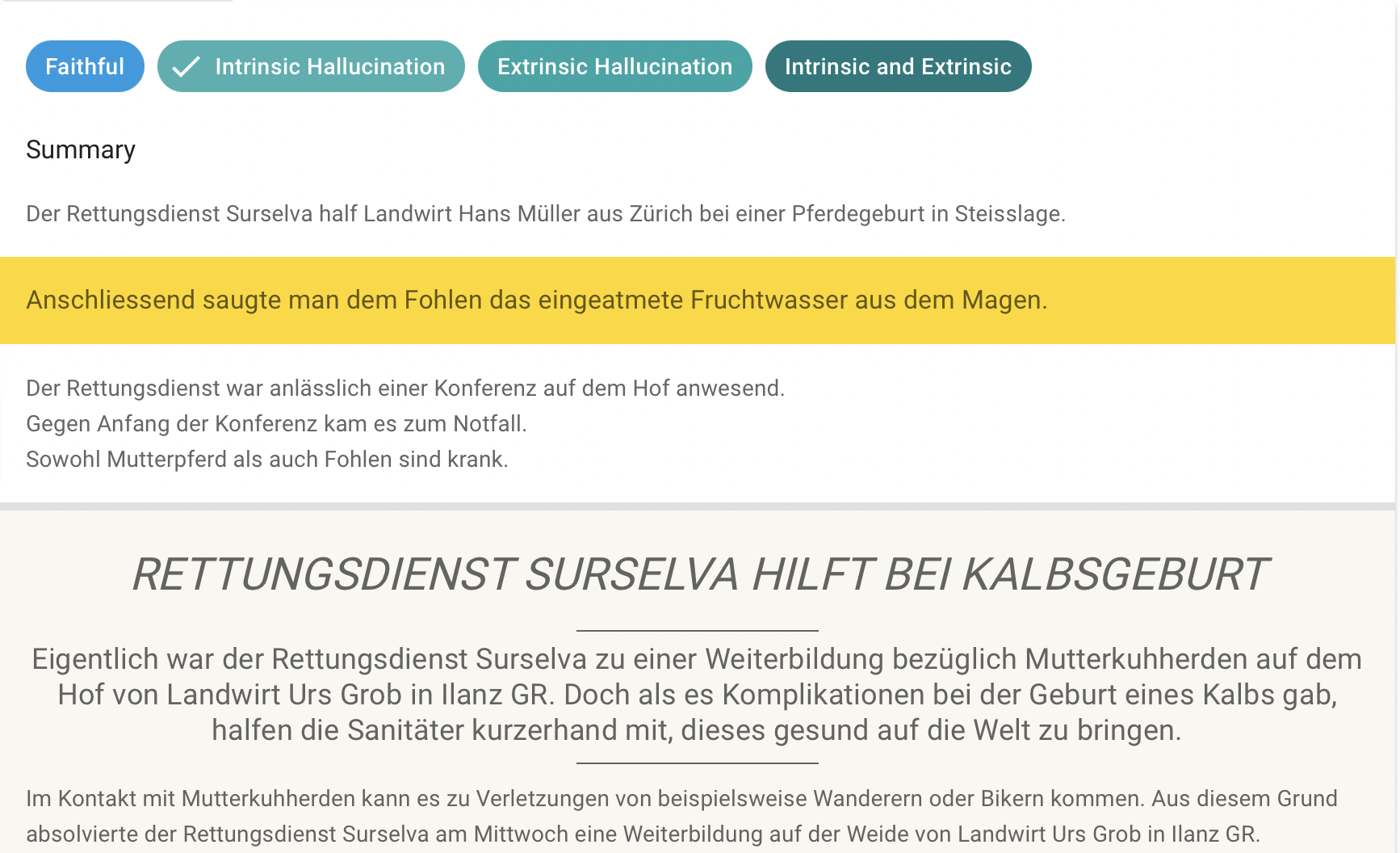

A team of 12 students from ETH Zurich and the University of Zurich performed the annotation task, achieving a substantial inter-annotator agreement of 0.77 Fleiss’ kappa score. To ensure high-quality annotations, participants attended an initial in-person training, and their performance was continuously assessed against a gold standard annotated by domain experts, allowing us to promptly provide them with clarifications on the annotation task if necessary. Additionally, they used an intuitive annotation interface that the MTC adapted to the task.

Absinth Dataset

A manually annotated dataset for hallucination detection in German news summarization. external page Find out more on GitHub

Inconsistency Detection with Absinth and LLMs

LLMs have recently come to the forefront in natural language processing, allowing for significant advancements in the field. These models, which are characterized by an augmented parameter scale, show a notable improvement in performance compared to well-established pre-trained language models like GPT-2.

Over the last year, the research community built and released multiple open-source LLMs. We therefore evaluate their performance at detecting hallucination using our Absinth dataset on multiple prompting approaches, such as fine-tuning, few-shot, or chain-of-thought prompting. In particular, we chose various model sizes of the Llama 2 family and Mistral, as they show state-of-the-art performance on different benchmarks. Since we focus on the German language, we also consider LeoLM models, which adapt the Llama 2 or Mistral models to German by further pretraining on German data. Our preliminary results show the strong abilities of chain-of-thought prompting, which enhances the model’s reasoning by providing a series of intermediate steps to generate the desired output, and confirm related work findings that the learning abilities of LLMs increase as the model scale becomes larger.

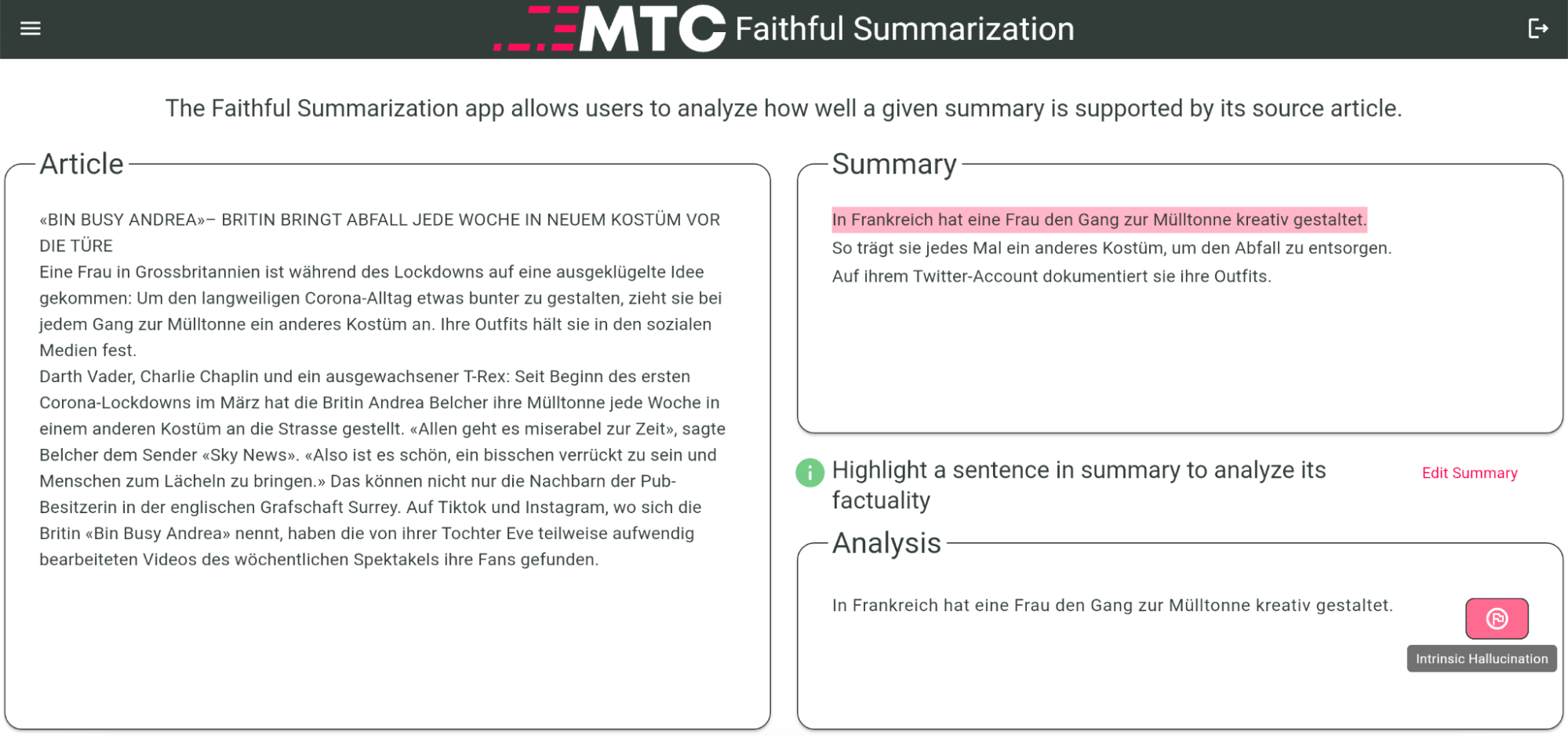

Project Demo Application

To support journalists in verifying the information from a given article and its corresponding summary, the MTC implemented and deployed a fact-aware demo application that uses our best-performing LLM model fine-tuned on chain-of-thought prompting. More specifically, the application allows users to automatically analyze the faithfulness of individual summary sentences against the source article and obtain more information on the type of hallucination if any.

Publications

Additional Project Resources

Resources for Industry Partners

Additional project resources for our industry partners are only available to registered users and can be found here.

Project Demo Applications

Project demos are hosted on our project demo external page dashboard. They are accessible by registered users only.